Artificial intelligence isn’t just a niche tool for cheating on homework or generating bizarre and deceptive images. It’s already humming along in unseen and unregulated ways that are touching millions of Americans who may never have heard of ChatGPT, Bard or other buzzwords.

A growing share of businesses, schools, and medical professionals have quietly embraced generative AI, and there's really no going back. It is being used to screen job candidates, tutor kids, help people buy homes and dole out medical advice.

The Biden administration is trying to marshal federal agencies to assess what kind of rules make sense for the technology. But lawmakers in Washington, state capitals and city halls have been slow to figure out how to protect people's privacy and keep AI from echoing the human biases baked into much of the data it is trained on.

“There are things that we can use AI for that will really benefit people, but there are lots of ways that AI can harm people and perpetuate inequalities and discrimination that we’ve seen for our entire history,” said Lisa Rice, president and CEO of the National Fair Housing Alliance.

While key federal regulators have said decades-old anti-discrimination laws and other protections can be used to police some aspects of artificial intelligence, Congress has struggled to advance proposals for new licensing and liability systems for AI models and requirements focused on transparency and kids’ safety.

“The average layperson out there doesn’t know what are the boundaries of this technology?” said Apostol Vassilev, a research team supervisor focusing on AI at the National Institute of Standards and Technology. “What are the possible avenues for failure and how these failures may actually affect your life?”

Here’s how AI is already affecting …

How people get a job…

AI has already influenced hiring, particularly at employers that use video interviewing tools.

Hiring technology these days isn’t just screening for keywords on resumes — it’s using powerful language processing tools to analyze candidates’ answers to written and video interview questions, said Lindsey Zuloaga, chief data scientist at HireVue, an AI-driven human resources company known for its video interviewing tools.

There may come a point when human interviewers become obsolete for some types of jobs, especially in high-turnover industries like fast food, Zuloaga said.

The Equal Employment Opportunity Commission, the small agency tasked with enforcing workplace civil rights, is closely monitoring developments in the technology. It has issued guidance clarifying that anti-discrimination laws still apply, and that employers can still be held liable for violations, even when AI tools assist in decision-making. It hasn't put out new regulations, however.

New York City last year began requiring transparency and annual audits for potential bias in automated hiring decisions, though the shape of those audits is still being worked out.

EEOC Chair Charlotte Burrows previously told POLITICO that rulemaking at her agency was still under consideration; however, Republican commissioner Keith Sonderling has said issues such as AI hiring disclosures may be left up to employers and state and local governments.

The agency’s general counsel, Karla Gilbride, told reporters in January that her office is looking specifically for cases of AI discrimination as part of its enforcement plan for the next several years. Citing the agency’s previous guidance, she said potential areas of risk include AI that discriminates against job applicants with disabilities.

“It is a developing landscape,” Gilbride said. “It is something that not just the EEOC, but I think the nation and the world as a whole is still sort of adjusting to.”

… and keep it.

While generative AI has been heralded as a sophisticated assistant to many types of workers, labor leaders have grown alarmed that it could quickly become a tool for invasive surveillance of workers' productivity.

Another concern is whether employers might wield an algorithm to overrule their workers' on-the-ground judgment. Hotels are already seeing technology used in this way, AFL-CIO President Liz Shuler said in an interview: Cleaning staff might want to tackle rooms in order, while an algorithm could tell them to "ping pong back and forth across floors" to prioritize higher-paying clients.

A government regulator for new AI technology, similar to the FDA for food and drugs, could be a partial solution, Shuler said. Federal support for worker training and retraining would also help, she said.

Biden's Labor Department has said it wants to steer AI development in a way that takes worker voices into account.

“If we continue to go down a path in which we don’t have guardrails, in which we don’t prioritize the well-being of working people then we don’t want to look back and say, what happened?” acting Labor Secretary Julie Su said.

How kids are being educated…

While ChatGPT has roiled academia with concerns about cheating and how to police it, AI is starting to assist K-12 students and teachers with everyday tasks and learning.

Some schools are using the technology to advance student achievement through AI-powered tutoring. Districts including Newark Public Schools in New Jersey are piloting Khan Academy’s AI chatbot Khanmigo, for instance.

Other school districts are using AI to enhance student creativity. In Maricopa Unified School District south of Phoenix, Arizona, some schools are using Canva and other AI platforms to generate images to assist with art projects and edit photos.

“The idea is that they’re using it to create,” said Christine Dickinson, technology director at the Maricopa district. “I know that they’re using it at home quite a bit, but our teachers are also being very mindful of the tools that they do have in place to ensure all of the students are, again, creating their own outcomes and not using it just for a replacement for the actual product.”

…at the discretion of districts.

Schools are looking to their state governments and the support of education groups for guidance on how to best use artificial intelligence in the classroom as they navigate a host of privacy and equity dilemmas the technology could create.

Ultimately, decisions on how AI will be used in the classroom will be left up to school districts. But federal, state and local officials have been clear about the need for AI to simply assist with teaching and learning and not replace educators.

“A number of districts and educators were curious very early on on how are we going to wrap our arms around this both as an opportunity but as a caution,” Oregon’s Education Department Director Charlene Williams said about artificial intelligence. But “nothing is ever going to replace a teacher and the importance of having adult oversight,” she said.

How homebuyers navigate the real estate market…

Because AI is good at complex data analysis, it has the potential both to revamp the housing industry and, as in hiring, to enshrine the human biases that have shaped American homebuying for generations.

Zillow points to its “Zestimate” tool, which calculates the value of a home, as an example of how artificial intelligence can arm home buyers and sellers with more information as they navigate a complicated financial decision.

“We’re using AI to understand user preferences and help them find the right home,” said Ondrej Linda, director of applied science at Zillow. “How do we help people envision themselves in a home? AI is making it easier to understand real estate, make the right decision and plan ahead.”

But algorithms are not impartial, civil rights groups warn: They’ve been fed data from a society where the legacy of redlining — the racist practice of using government-issued maps to discourage banks from lending in Black neighborhoods — is reflected in information about school, home and grocery store locations decades after the practice was officially outlawed.

Rice, of the National Fair Housing Alliance, said she is concerned that tenant screening models incorporating AI make it harder for consumers to challenge a decision that might deny them housing.

“There’s very little transparency behind these systems, and so people are being shut out of housing opportunities and they don’t know why,” Rice said.

… as new tools draw scrutiny from the feds.

Algorithms have already run afoul of federal fair housing law, according to a Department of Housing and Urban Development investigation.

Meta settled with the Justice Department in 2022 after HUD alleged that Facebook's algorithms violated the law by allowing advertisers to steer housing ads to users based on race, gender and other factors. The company, which did not admit to any wrongdoing, agreed as part of the settlement to discontinue the advertising tool and overhaul how it delivers housing ads.

Housing is one area where federal agencies have taken the issue of AI rulemaking head-on. Wary of artificial intelligence’s capacity to codify human bias, the Consumer Financial Protection Bureau, the Federal Housing Finance Agency, and other regulators proposed a rule in June to police the way AI is used in home value appraisals.

Then in September, the CFPB issued guidance detailing the steps lenders must take to ensure that they identify “specific and accurate reasons” for denying someone credit when relying on AI or other complex algorithms.

And President Joe Biden’s October executive order directed the Federal Housing Finance Agency to consider requiring federal mortgage financiers to evaluate their underwriting models for discrimination and use automation in a way that minimizes bias.

How doctors interact with patients…

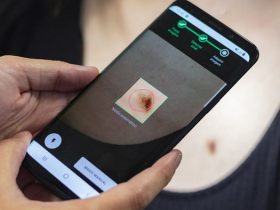

AI is increasingly becoming a co-pilot for doctors across the country.

When a patient gets an X-ray, AI systems are now assisting physicians in deciphering the images — and some can do it as well as a human, according to recent studies. And they’re already being put to work to measure and improve the performance of radiologists.

Beyond imaging, new AI systems are helping doctors make sense of their notes, conversations with patients and test results. They're being used to suggest probable causes for a patient's ailments, even though some of these tools sidestep the government rules that scrutinize new medical devices and drugs.

UCLA Medical Center oncologist Wayne Brisbane uses artificial intelligence to help predict how prostate cancer is manifesting inside of someone’s body.

“Prostate cancer is a tricky disease,” he said in an interview. The tumors grow in multiple locations and extend in ways that may not get picked up on imaging.

“It’s like an octopus or crab. There’s a big body, but it’s got these little tentacles that extend out. And so figuring out the margins of where you’re going to do this lumpectomy or treatment can be difficult,” Brisbane said.

AI makes surgical planning a lot easier, he said.

The Food and Drug Administration has taken an experimental approach to regulating artificial intelligence in medical devices, mostly issuing strategic plans and policy guidance.

The biggest challenge with regulating AI is that it evolves over time, and companies don't want to reapply for FDA clearance every time they update their technology. As a result, in April 2023, the FDA published draft guidance telling companies how to describe planned updates to their algorithms in their initial applications. So far, the agency has cleared nearly 700 AI-enabled devices.

Meanwhile, the Department of Health and Human Services' Office of the National Coordinator issued a rule late last year requiring AI developers to be more transparent with health systems about the data behind their tools, so that those systems can ensure the technology is safe and fair.

Biden's executive order on AI also charges HHS with creating an AI task force, a safety program and an assurance policy.

… and who pays the bill.

Even more so than in clinical practice, AI is making inroads in digesting the paperwork that has come to define the $4 trillion in medical bills Americans accrue each year.

Companies are experimenting with new tools that could revolutionize the way bills are generated and processed between care providers and insurers. Across the sector, huge sums of money are at stake — not only because of the potential labor and time savings, but also because the tech can scrutinize bills more efficiently and is building a record of boosting revenue.

Advocates of the technology say it means patients will face shorter processing times on claims, making care more accessible.

But skeptics worry AI could reduce transparency and accountability in decision-making across providers and insurers alike.

UnitedHealthcare and Cigna are both facing lawsuits accusing the companies of using artificial intelligence and other sophisticated formulas to systematically deny care.

The complaint against Cigna alleges the company used an algorithm to flag any patient care that wasn’t on its list of acceptable treatments for the diagnosis. The algorithm would adjudicate patient claims and a doctor would review and sign that finding, spending as little as one second on such rejections, according to reporting from ProPublica.

UnitedHealthcare is being accused of using a proprietary algorithm to shortchange patients on care. The suit also asserts that the algorithm has a 90 percent error rate — a claim that, if true, has profound implications for the 52.9 million Americans covered by United.

Cigna issued a statement saying its technology is not AI and is used only on low-cost claims. It also said the process does not deny anyone care, because the review takes place after treatment, and that most patients don't have to pay additional costs even if a claim is denied.

UnitedHealth Group also said its naviHealth predict tool is neither generative AI nor used to make coverage determinations. "Adverse coverage decisions are made by physician medical directors and are consistent with Medicare coverage criteria for Medicare Advantage Plans," a company spokesperson said in a statement.

But just as in housing, there is also concern that algorithms may not treat people equally. New York City launched a Coalition to End Racism in Clinical Algorithms in 2021 to push health systems to stop using biased methodologies, since AI trained on data sets with insufficient diversity can deliver skewed results.

However, across industries, efforts to reduce an AI’s bias by removing certain information may also make those systems less robust, NIST’s Vassilev said.

The right balance can also depend on the industry, he said, and for now it is being struck without government intervention.

“You cannot have it all,” he said. “You have to make a compromise and learn to live with a budget of risk on any one of these avenues.”

© politico.com
