Microsoft Research has offered an early look at where AI-generated video may be heading with VASA-1, a research project that creates hyper-realistic talking faces from a single portrait photo and an audio clip. The work could change how we interact with virtual characters.

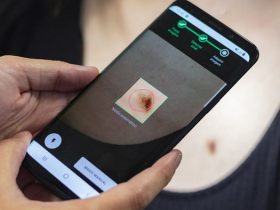

Imagine uploading a photo of yourself and a snippet of your voice, then watching a lifelike animated version of your face speak in sync with the audio. That is what VASA-1 does: it generates videos with accurate lip sync, realistic facial expressions, and natural head movements. The results are striking, and Microsoft's researchers report that they surpass previous methods in realism.

Other lip-sync and head-movement tools exist, but VASA-1 stands out for its quality and realism. The mouth artifacts that often give such videos away are largely absent. Instead, VASA-1 produces smooth, natural movements that mimic real speech.

VASA-1 is also not limited to face-forward photographic portraits; it works with a variety of image styles. This versatility opens up many possibilities, from lifelike NPCs that make games more immersive to virtual avatars for social media videos.

The implications extend beyond entertainment. In AI-assisted filmmaking, for example, realistic virtual singers could bring music videos to life in new ways. VASA-1 can also lip-sync convincingly to songs even though singing audio was not part of its training data, which suggests it generalizes well beyond the material it was trained on.

Under the hood, VASA-1 is efficient. On a desktop PC with a single Nvidia RTX 4090 GPU, it generates 512×512 video frames at up to 45 frames per second in offline batch mode, and at up to 40 frames per second in online streaming mode with a starting latency of about 170 ms. It is currently a research preview with no plans for a public release, but the prospect of seeing it integrated into AI video tools like Runway or Pika Labs is enticing.

It is not hard to imagine VASA-1 becoming a cornerstone of AI-driven creativity, blending technology and artistry. Given Microsoft's close partnership with OpenAI, integration with products such as Microsoft Copilot or OpenAI's Sora is an intriguing, if speculative, possibility.

As we await the next steps, one thing is clear: VASA-1 is a significant leap forward in AI-driven animation, offering a glimpse of a future in which the line between the real and the virtual continues to blur.
